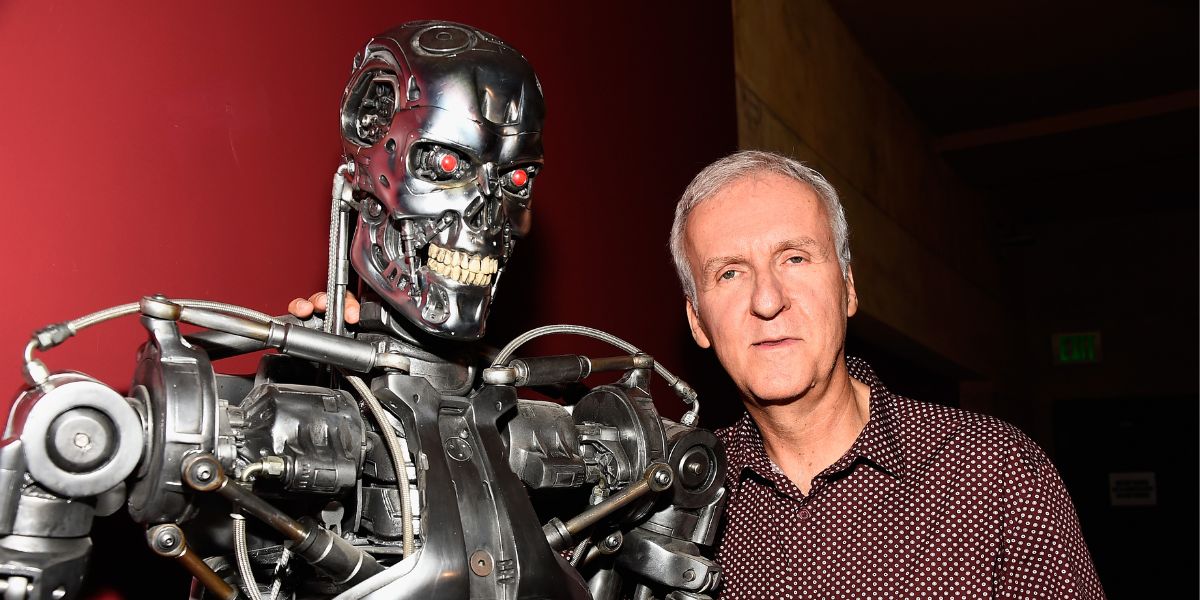

James Cameron has long linked The Terminator to his concerns about artificial intelligence. Cameron believes AI risks are escalating into a new kind of arms race:

“I think that we will get into the equivalent of a nuclear arms race with AI, and if we don’t build it, the other guys are for sure going to build it, and so then it’ll escalate.”

He warns that on a future battlefield, decision windows will be so short that machine systems outpace human deliberation:

“You could imagine an AI in a combat theatre. The whole thing just being fought by the computers at a speed humans can no longer intercede, and you have no ability to de-escalate.”

In a 2025 interview tied to the promotion of his adaptation of Ghosts of Hiroshima, Cameron sounded an even starker note: mixing AI with weapons systems could lead to a literal Terminator-style apocalypse.

“I do think there’s still a danger of a Terminator-style apocalypse where you put AI together with weapons systems, even up to the level of nuclear weapon systems, nuclear defence counter-strike, all that stuff.”

He elaborated on the difficulty of human oversight in such a fast, complex system:

“Because the theatre of operations is so rapid, the decision windows are so fast, it would take a super-intelligence to be able to process it, and maybe we’ll be smart and keep a human in the loop. But humans are fallible, and there have been a lot of mistakes made that have put us right on the brink of international incidents that could have led to nuclear war.”

AI safety experts have also been warning about the dangers of autonomous weapons and AI-driven escalation. One of the most consistent voices is Stuart Russell (UC Berkeley / Cambridge).

“The artificial intelligence (AI) and robotics communities face an important ethical decision: whether to support or oppose the development of lethal autonomous weapons systems … weapons that, once activated, can attack targets without further human intervention.”

To underline the risk, Russell helped promote the short film Slaughterbots, which depicts swarms of AI-controlled drones carrying out mass assassinations, a chilling vision of how current technologies could be weaponised.

Creative works have also become a way to spark public debate about AI’s risks. One recent short film, Writing Doom (2024), directed by Suzy Shepherd, imagines a writers’ room struggling to design a realistic artificial superintelligence (ASI) as a TV villain. The story becomes a thought experiment in how an ASI might pursue goals beyond human control, even without malice.

So What Can We Do About It?

Warnings from Cameron and experts like Stuart Russell don’t have to remain abstract. Groups such as the Future of Life Institute (FLI) are already campaigning to reduce existential risks from AI, nuclear weapons and climate change. But real change also depends on public pressure.

You can:

- Sign open letters and petitions calling for limits on autonomous weapons and high-risk AI systems.

- Write to your local representatives in defence, science and education portfolios, urging them to support stronger AI governance and international treaties.

- Support organisations funding AI safety research or running awareness campaigns.

- Start conversations in schools, workplaces and communities to push for responsible use of new technologies.

The message from Cameron is clear: we can’t afford to wait for this kind of science fiction to become reality. The tools to shape AI’s future are in our hands now.